Bringing People Together via AI and Automation

Welcome to the hub of innovation: a place to navigate AI-powered automation for collaborative human experiences.

Our educational hub is designed to be your companion as you navigate AI in AV. Check out our A-Z guide to VisionSuite or dive into our blogs and learning on demand, all focused on making AI simple and accessible.

Ready to explore AI in AV? Join one of our upcoming webinars and become a forward-thinker who is shaping the future.

Learn On Demand

The A-Z of VisionSuite


Automatically adjusts a camera’s digital zoom to keep detected faces centred and composed in the frame. This feature adjusts the frame padding as participants join or leave, achieving close-up shots for remote participants.
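As a rough illustration of the idea, the sketch below computes a crop rectangle that encloses every detected face plus some padding. It is not VisionSuite's implementation: the function name `framing_crop`, the `(x_min, y_min, x_max, y_max)` pixel box format, and the fixed `padding` value are all assumptions made for this example.

```python
def framing_crop(face_boxes, padding, frame_width, frame_height):
    """Return a crop (x_min, y_min, x_max, y_max) enclosing all faces plus padding.

    face_boxes is a list of hypothetical (x_min, y_min, x_max, y_max) pixel boxes.
    """
    if not face_boxes:
        # No faces detected: fall back to the full frame.
        return (0, 0, frame_width, frame_height)

    # Smallest rectangle that contains every face, grown by the padding.
    x_min = min(box[0] for box in face_boxes) - padding
    y_min = min(box[1] for box in face_boxes) - padding
    x_max = max(box[2] for box in face_boxes) + padding
    y_max = max(box[3] for box in face_boxes) + padding

    # Clamp the crop so it never leaves the camera frame.
    return (
        max(0, x_min),
        max(0, y_min),
        min(frame_width, x_max),
        min(frame_height, y_max),
    )
```

Recomputing the crop whenever the list of faces changes is what makes the frame widen or tighten as participants join or leave. A real system would likely also preserve the output aspect ratio and smooth the transitions, which this sketch leaves out.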

Act as sensors to enable intelligent switching between active speakers. Based on the vertical and horizontal angle data from these microphones, Q-SYS dynamically triggers and switches between user-defined camera presets.

Interprets what's happening in the room and turns it into a general understanding of the scene. Processes sequences of frames to understand scene dynamics, predict movement, and differentiate between detections.

A range of movement around a presenter’s head within which no reaction occurs. Prevents minor movements from causing unnecessary camera adjustments.
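A minimal sketch of how such a dead zone might work, assuming normalised image coordinates and a rectangular zone centred on the framing target. The names `axis_correction` and `dead_zone_correction` are hypothetical and not part of any VisionSuite API.

```python
def axis_correction(offset, half_size):
    """Return how far the camera should move along one axis."""
    if abs(offset) <= half_size:
        # Head is still inside the dead zone: do not react.
        return 0.0
    # Head left the zone: move only by the amount it exceeds the zone edge.
    return offset - half_size if offset > 0 else offset + half_size


def dead_zone_correction(head, center, half_width, half_height):
    """Return the (dx, dy) camera correction for a head position.

    head and center are (x, y) tuples in the same assumed coordinate system.
    """
    dx = axis_correction(head[0] - center[0], half_width)
    dy = axis_correction(head[1] - center[1], half_height)
    return (dx, dy)
```

With a half-width of 0.05, a head that drifts from 0.50 to 0.52 produces no correction, while a move to 0.70 produces a correction of roughly 0.15, just enough to bring the head back to the edge of the zone.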

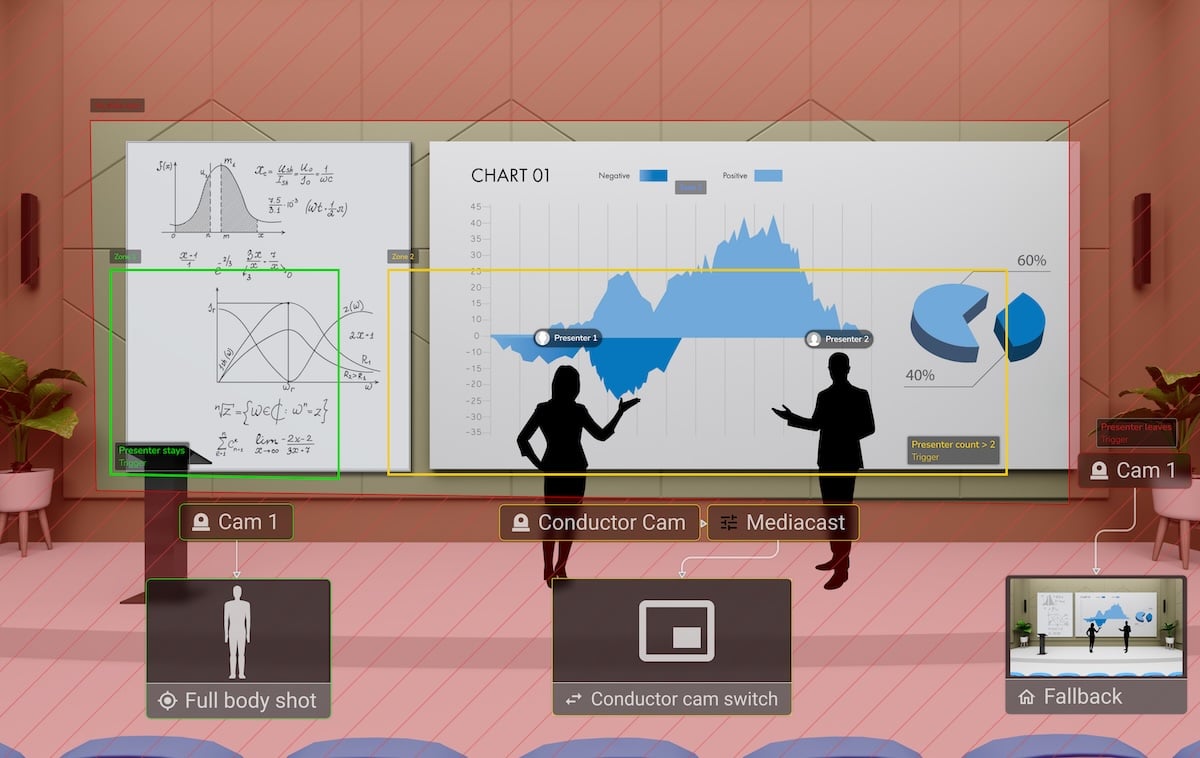

The Seervision AI Accelerator understands the visual environment in real-time, sending and receiving event triggers based on people’s positions on stage. Enables endless room automation possibilities.

VisionSuite analyses more than 20 different reference points all over the body of every person detected. Capable of confidently identifying and tracking a subject even when half of their body is obscured.

VisionSuite identifies multiple presenters and understands group dynamics, triggering different shots based on the number of people on stage and their relative positions.

Dynamic, scalable meeting spaces that provide an immersive, engaging experience for in-room and hybrid participants. VisionSuite enables these spaces with elevated requirements for audio, video, and control.

With Q-SYS networked video and intelligent beamforming microphones, customize the hybrid collaboration experience with seamless switching between presenter and audience-facing cameras.

With Trigger Zones, minimise the amount of input required by a presenter to start their presentation. When the presenter simply walks in, the technology reacts autonomously, so presenters do not have to use touch panels or press any buttons.

Keypoint detection identifies specific points of interest on each detected subject, such as joints or facial features, to recognise the full body. VisionSuite leverages keypoint detection for precise tracking and analysis of human movements.

There is a delay between the camera capturing an image and our system extracting the necessary visual information from it. Our algorithms are tuned to compensate for this delay by acting on the predicted movement of the tracked person.
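The general idea of acting on a predicted position can be sketched with a simple constant-velocity model. This is only an assumed stand-in: the page does not say which prediction method VisionSuite uses, and the function name `predict_position` and its parameters are hypothetical.

```python
def predict_position(previous, current, frame_interval, latency):
    """Estimate where a tracked person will be once the latency has elapsed.

    previous and current are (x, y) positions from two consecutive frames,
    frame_interval is the time between those frames in seconds, and
    latency is the processing delay to compensate for, also in seconds.
    """
    # Estimate velocity from the last two observations.
    velocity_x = (current[0] - previous[0]) / frame_interval
    velocity_y = (current[1] - previous[1]) / frame_interval

    # Assume the person keeps moving at that velocity during the delay.
    return (
        current[0] + velocity_x * latency,
        current[1] + velocity_y * latency,
    )
```

Aiming the camera at the predicted position rather than the last observed one keeps a moving presenter from drifting behind the centre of the frame.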

VisionSuite leverages machine learning to continually enhance and improve our models. From labelling to training, the goal is to always optimize the performance of person detection and identification.

Seamlessly integrate NC Series Cameras into Q-SYS systems for easy camera feed routing without complex programming, and deliver these video feeds to Microsoft Teams, Zoom, Google Meet, and Cisco Webex via Q-SYS AV bridging peripherals.

VisionSuite's orchestrated room control coordinates cameras, audio, displays, lighting, and other room elements to create a seamless and optimized collaboration environment.

Short for Pan, Tilt, Zoom. VisionSuite leverages the NC Series PTZ cameras to dynamically adjust camera views, following presenters with smooth motions for the best far-end viewer experience.

Q-SYS Designer is the brain of your VisionSuite system, allowing you to design, configure, and control your entire AV stack.

Imagine an all-hands space where the lights dim, projector screens lower, microphones are unmuted, and cameras zoom in on the presenter the moment they step up to the podium – all without touching a single button. That’s room automation.

The Seervision AI Accelerator leverages computer vision scene analysis to deliver best-in-class camera automation, analysing live video feeds to understand what's happening in the scene.

VisionSuite's tracking capabilities enable cameras to automatically follow presenters, even in challenging conditions, thanks to full-body recognition.

Our perception algorithms use a complex structure of facial and body features to uniquely identify the subjects in frame and give each a unique identifier (ID).

Q-SYS VisionSuite is an intelligent audio, video and room automation suite. It improves in-room participant visibility and promotes a natural viewing experience for the far-end, fostering team unity and engagement, regardless of location.

Different Trigger Zones can be placed around a space to trigger different automations. A great use case is the whiteboard switch. Whenever the presenter walks into a whiteboard zone, a camera switch is automatically triggered.
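The whiteboard switch can be sketched as a check for when a presenter's position crosses into a zone. The example assumes rectangular zones on a 2D floor plan and uses hypothetical names (`Zone`, `zone_events`); it does not reflect how zones are actually configured in VisionSuite.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Zone:
    """A hypothetical rectangular trigger zone on a 2D floor plan."""
    name: str
    x_min: float
    y_min: float
    x_max: float
    y_max: float

    def contains(self, x, y):
        return self.x_min <= x <= self.x_max and self.y_min <= y <= self.y_max


def zone_events(zones, previous_position, current_position):
    """Return ("enter" | "exit", zone name) events between two positions.

    previous_position may be None when the presenter has just been detected.
    """
    events = []
    for zone in zones:
        was_inside = previous_position is not None and zone.contains(*previous_position)
        is_inside = zone.contains(*current_position)
        if is_inside and not was_inside:
            events.append(("enter", zone.name))
        elif was_inside and not is_inside:
            events.append(("exit", zone.name))
    return events
```

An `("enter", "whiteboard")` event would be the cue to switch to the whiteboard camera, and the matching exit event the cue to switch back. Firing on transitions rather than on every frame avoids re-triggering the automation while the presenter stays in the zone.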

OK, this only sounds like it starts with an X... Exclusion areas enable VisionSuite to ignore detections in set areas, allowing for robust identification of presenters even when the system detects unwanted subjects on TV screens or in windows.
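One simple way to picture this is to drop any detection whose centre falls inside an excluded rectangle. The sketch below assumes `(x_min, y_min, x_max, y_max)` pixel boxes for both detections and exclusion areas, and the helper names are hypothetical; the actual matching rule used by VisionSuite is not described here.

```python
def box_center(box):
    """Return the (x, y) centre of an (x_min, y_min, x_max, y_max) box."""
    return ((box[0] + box[2]) / 2, (box[1] + box[3]) / 2)


def point_in_area(point, area):
    """Return True if the point lies inside the rectangular area."""
    return area[0] <= point[0] <= area[2] and area[1] <= point[1] <= area[3]


def filter_detections(detections, exclusion_areas):
    """Keep only detections whose centre is outside every exclusion area."""
    return [
        detection
        for detection in detections
        if not any(point_in_area(box_center(detection), area) for area in exclusion_areas)
    ]
```

Drawing an exclusion area over a wall-mounted TV, for example, would stop a person shown on that screen from being treated as a presenter.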

Lock the camera’s y-axis (tilt movement) when tracking. This is perfect for when presenters move side to side and you only want to track them on a single plane.

From Trigger Zones to Tracking Zones, zones are everywhere within VisionSuite. Trigger Zones are free-standing virtual zones placed around the presentation area, allowing for custom shots, camera behaviours, or external events to be automatically triggered.